Across every industry, organizations are moving quickly to pilot AI. They are automating help desk workflows, summarizing contracts, and generating reports. Yet many of these efforts never reach full deployment. The reason is not a lack of technology. Models can reason, and tools can integrate. What truly holds progress back is a lack of trust. Leaders, customers, and regulators remain uncertain about how AI systems handle sensitive data, make decisions, and remain accountable. Building that trust is not a technical milestone but the foundation of AI readiness.

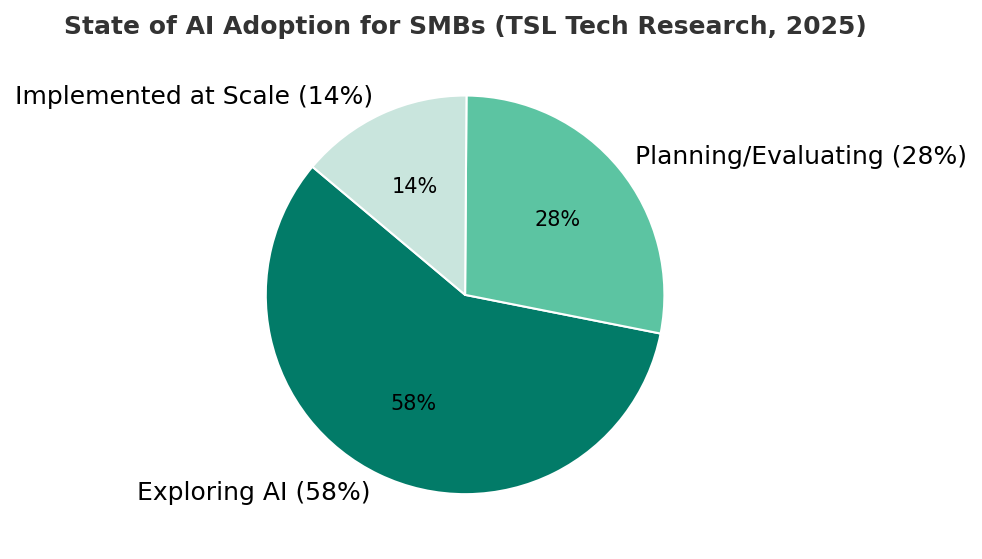

Most SMBs are experimenting with AI, but few have crossed the trust threshold to full implementation.

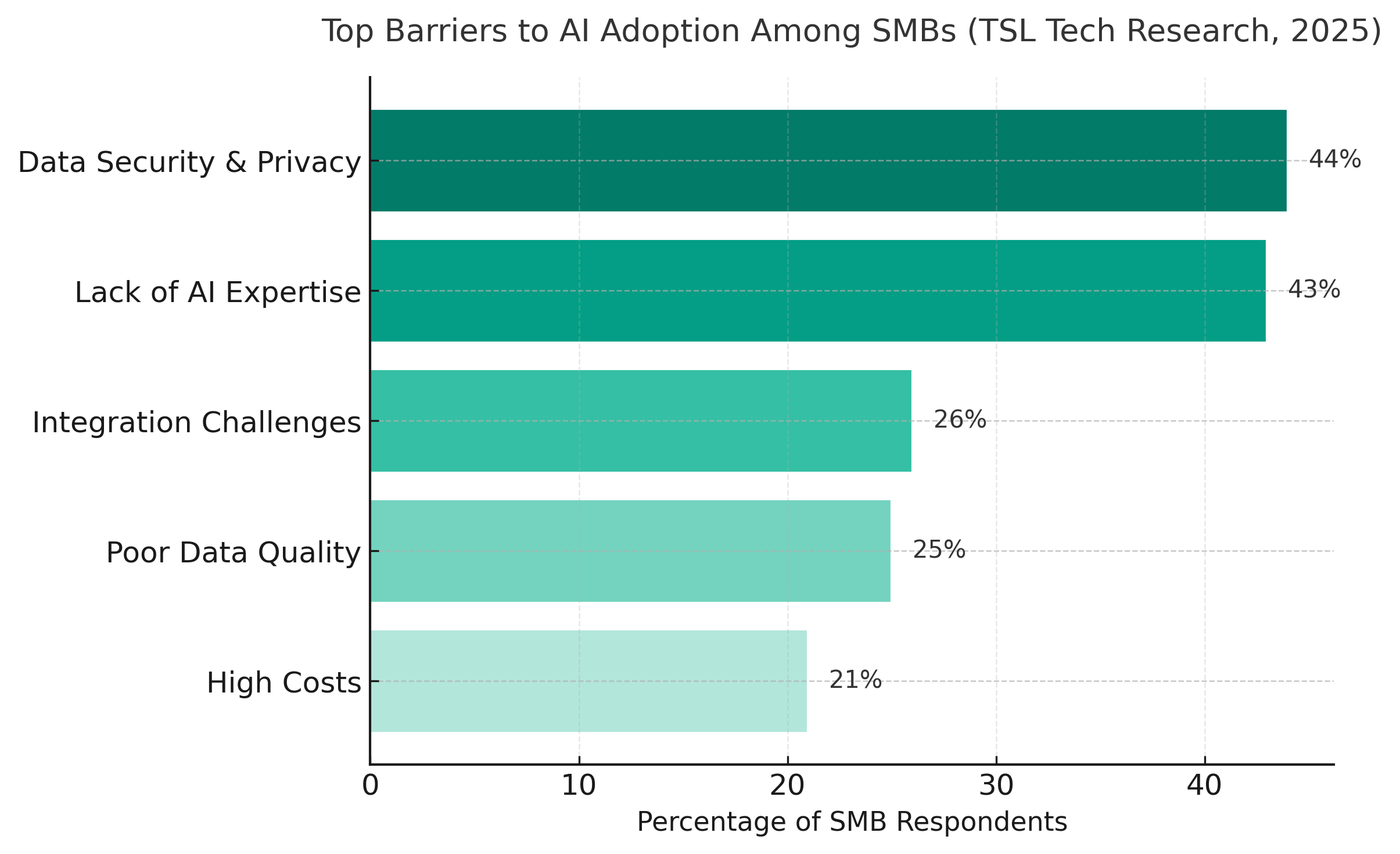

Security, skills, and integration aren’t separate; they’re a single governance challenge.

Trust is the New Infrastructure

In the cloud era, trust was built on firewalls and service-level agreements. In the AI era, trust depends on transparency, control, and compliance: understanding how systems use data, governing who can act and how, and proving adherence to policies and laws. TooBZ defines AI readiness as the ability to innovate while preserving trust among customers, employees, and regulators.

Three Pillars of Trust Readiness

- Transparency: Understand how models operate, what data they access, and where results are used or stored.

- Control: Record every action, assign precise access levels, and ensure all changes can be reviewed or reversed.

- Compliance: Ensure every outcome follows internal policy and external regulation, and maintain continuous proof of adherence.

Traditional Readiness vs Trust Readiness

| Dimension | Traditional Readiness | Trust Readiness |
|---|---|---|
| Technology Focus | Hardware and firewalls | AI and automation governance |
| Data Access | Centralized, limited visibility | Transparent, monitored usage |
| Security | Perimeter defense | Zero Trust and continuous validation |
| Governance | Manual policies, ad hoc audits | Policy-as-code and real-time evidence |
| Readiness Outcome | Operational uptime | Sustained innovation with compliance |

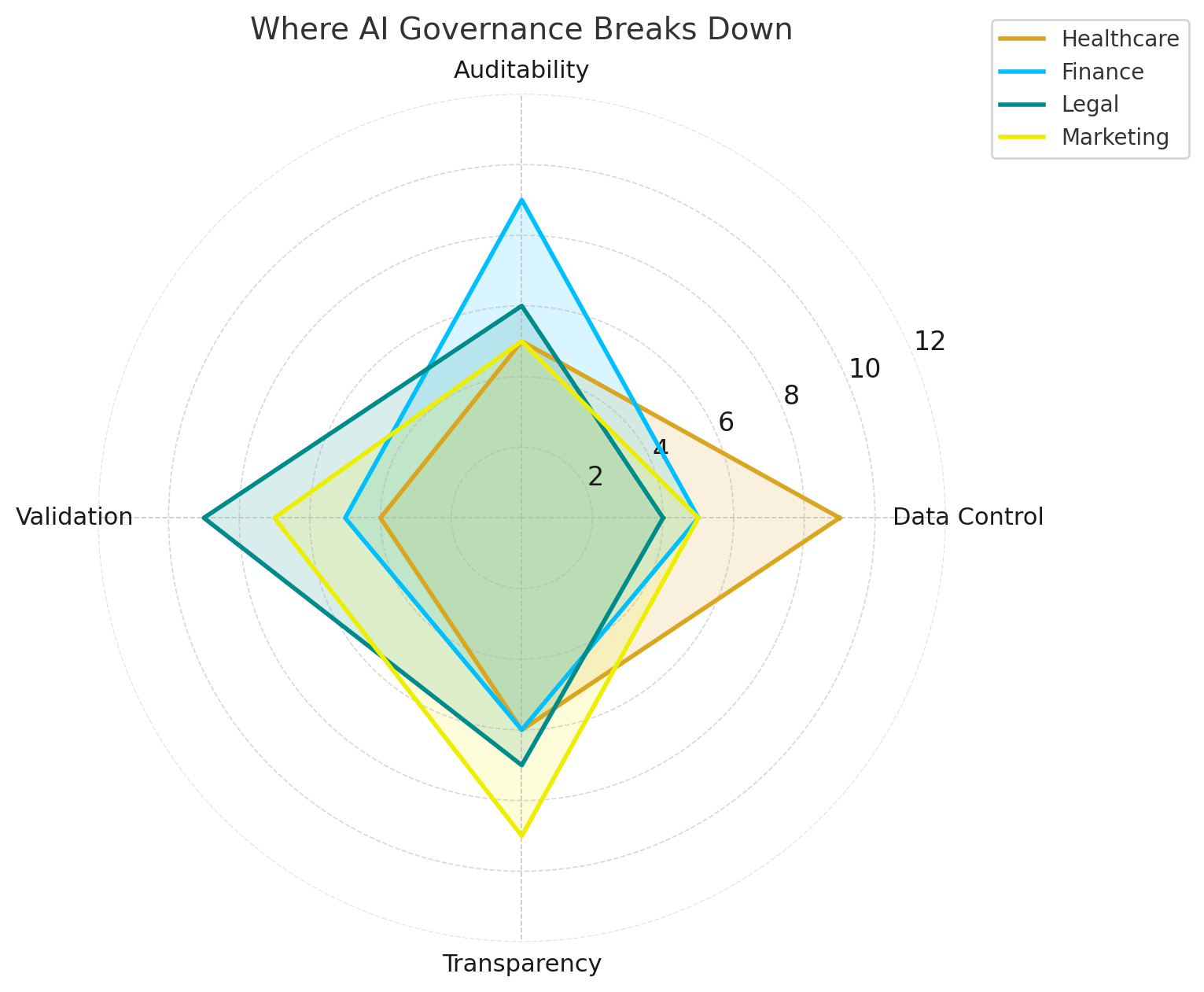

When Trust Breaks: Common Failure Patterns

Healthcare (Data Control)

It starts with good intentions. A clinician, running behind schedule, copies patient notes into a public chatbot to finish documentation more quickly. The information leaves the organization’s secure systems and enters an environment with no safeguards. By the time anyone notices, sensitive data is already outside the network’s control. What began as an attempt to save time becomes a breach of privacy. The issue is not the model but the absence of data control: no approved tools, no monitoring, and no policy-as-code.
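To make "policy-as-code" concrete, here is a minimal sketch of a rule that lets sensitive text go only to approved tools. Everything in it is an assumption for illustration: the tool names, the `APPROVED_TOOLS` allowlist, the two regular expressions, and the `evaluate` function are hypothetical, and real detection of patient data needs far more than pattern matching.

```python
import re
from dataclasses import dataclass

# Hypothetical allowlist; in practice this would be a reviewed, version-controlled policy file.
APPROVED_TOOLS = {"internal-clinical-assistant"}

# Illustrative patterns only; these are assumptions, not a complete PHI detector.
SENSITIVE_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "mrn": re.compile(r"\bMRN[:\s]*\d{6,10}\b", re.IGNORECASE),
}


@dataclass(frozen=True)
class Decision:
    allowed: bool
    findings: tuple  # labels of the sensitive patterns that matched
    reason: str


def evaluate(tool: str, text: str) -> Decision:
    """Allow the request unless sensitive text is headed to an unapproved tool."""
    findings = tuple(
        label for label, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)
    )
    approved = tool in APPROVED_TOOLS
    if findings and not approved:
        return Decision(False, findings, f"sensitive data may not be sent to '{tool}'")
    return Decision(True, findings, "ok")


if __name__ == "__main__":
    note = "Patient MRN: 12345678 reports chest pain"
    print(evaluate("public-chatbot", note))               # blocked
    print(evaluate("internal-clinical-assistant", note))  # allowed, findings recorded
```

Because the rule is code, it can be reviewed, tested, and logged like any other change, which is what separates it from a policy that exists only in a handbook.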

Finance (Auditability)

In the finance department, an AI assistant handles invoice approvals automatically. For months, the system performs flawlessly. Then an auditor asks for proof of who approved a six-figure payment. The records are incomplete, and no one can explain how the decision was made. The technology functioned as designed, but accountability disappeared. The real failure is one of auditability: there were no clear logs, no recorded approvals, and no oversight.
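One way to keep that proof available is an append-only log in which each entry carries a hash of the one before it, so later edits become detectable. The sketch below is illustrative only: the `AuditLog` class, its field names, and the sample actor and invoice values are assumptions, and a production system would also need durable storage and access controls.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """In-memory, hash-chained log of who did what (hypothetical sketch)."""

    def __init__(self):
        self._entries = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself, in a stable key order.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def record(self, actor: str, action: str, details: dict) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "details": details,
            "prev_hash": self._entries[-1]["hash"] if self._entries else GENESIS_HASH,
        }
        entry["hash"] = self._digest(entry)
        self._entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return True only if no entry was altered, removed, or reordered."""
        prev_hash = GENESIS_HASH
        for entry in self._entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.record("ai-assistant", "recommend_approval", {"invoice": "INV-001", "amount": 250000})
    log.record("j.doe", "approve_payment", {"invoice": "INV-001"})
    print(log.verify())
```

With a record like this, the auditor's question has a direct answer: the entry names the human approver, and the chain shows the record has not been changed since.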

Legal (Validation)

Late one evening, a paralegal uses a language model to speed up a court filing. The AI produces well-formatted text filled with citations that appear credible. Confident, the team submits it to the court. Days later, they learn that none of the cases or quotes actually exist. The firm faces sanctions and embarrassment. The problem was not the AI’s creativity but the missing process of validation and human review before submission.
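The missing review step can be expressed as a simple gate: nothing is submitted until every citation has been checked by a named person. This is a minimal sketch under stated assumptions; the `Filing` structure and the function names are hypothetical, the citation strings are placeholders, and the actual verification (looking each case up in an authoritative source) remains human work.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Filing:
    text: str
    citations: List[str]
    verified: Set[str] = field(default_factory=set)  # citations a person has confirmed
    reviewer: Optional[str] = None                   # who signed off on the draft


def mark_verified(filing: Filing, citation: str, reviewer: str) -> None:
    """Record that a named reviewer confirmed a citation that appears in the draft."""
    if citation not in filing.citations:
        raise ValueError(f"citation not in filing: {citation}")
    filing.verified.add(citation)
    filing.reviewer = reviewer


def unverified_citations(filing: Filing) -> List[str]:
    return [c for c in filing.citations if c not in filing.verified]


def ready_to_submit(filing: Filing) -> bool:
    """The gate: a reviewer is on record and no citation is left unchecked."""
    return filing.reviewer is not None and not unverified_citations(filing)


if __name__ == "__main__":
    draft = Filing(
        text="AI-drafted brief",
        citations=["Placeholder Case A", "Placeholder Case B"],
    )
    print(ready_to_submit(draft))  # False: nothing has been checked yet
    mark_verified(draft, "Placeholder Case A", reviewer="paralegal-1")
    print(unverified_citations(draft))  # ['Placeholder Case B']
```

The value of the gate is less in the code than in the rule it enforces: AI output is treated as a draft until a person has verified it.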

Marketing (Transparency)

The campaign looks perfect. The testimonials are glowing, the customer stories convincing, the faces friendly and relatable. Only later does the truth surface: every persona was synthetic, every quote generated by AI. Audiences feel deceived, and trust erodes overnight. The breakdown stems from a lack of transparency about how content was created and approved.

The Hidden Cost of Distrust

When AI initiatives lose momentum, the cost extends far beyond missed deadlines. Organizations face project delays, lost opportunities when buyers request proof of data protection, and rework to satisfy auditors or remediate compliance gaps. These disruptions erode confidence among employees, customers, and partners. Ironically, many companies increase their security budgets just to restore the trust that was lost, often doing so after the damage has already been done.

When trust is broken, time, opportunity, and capital are the first casualties.

The impact of distrust is measurable. In TooBZ’s experience, organizations that embed governance early in their AI projects consistently outperform those that treat it as an afterthought.

From Fear to Structure: What Comes Next

Trust isn’t an add-on; it must be designed. In “What Guardrails Really Mean,” we’ll move from the problem to the pattern: a practical framework that turns trust into structure, so pilots move faster, audits run more smoothly, and stakeholders shift from skepticism to sponsorship.

Preview topics: identity & access, data protections, workflow controls, responsible AI behavior, and continuous proof.

References

- TSL Tech Research (2025): State of AI Adoption for SMBs; IT Security Insights from 1,285 SMB Leaders; Top Ways to Control the Cost of IT Services.

- HubSpot (2025): AI-generated content usage & marketer concerns.

- MSP Growth Hacks (2025): “How MSPs Are Setting the Table for AI Adoption Among SMBs.”

- SmarterMSP (2025): “2025 Trends for MSP Marketing.”

- NIST SP 800-53; NIST SP 800-37 (RMF); NIST SP 800-207 (Zero Trust): referenced for general governance concepts.